Download Pdf From Website In R

The word "confluence" means the juncture or merging of things, usually rivers. I grew up in West Virginia near the confluence of Gauley River (world-class whitewater for you rafters) and Meadow River (a Smallmouth bass fishing haven). The word confluence is also an apt description of how the intellectual currents of my work converge. My work sits at the confluence of statistics, data science, and public policy. Were these rivers, data science would undoubtedly be the smallest. Though it's fashionable to call oneself a "data scientist" these days, a data scientist I am not. I do, however, use many of the tools, techniques, and processes of a data scientist.

I study public policy, and as such, don't have the luxury of data that is of consistent form and measurement (e.g., dollars and cents for you economists). So, one day I am working with machine learning techniques for classifying text, the next I am working on measurement models for these classifications, and thereafter I may be building an extreme events model for some facet related to the data. All this means that I do a LOT of what data scientists, particularly those of the Tidyverse persuasion, call "data wrangling" — cleaning, organizing, and transforming data for analysis.

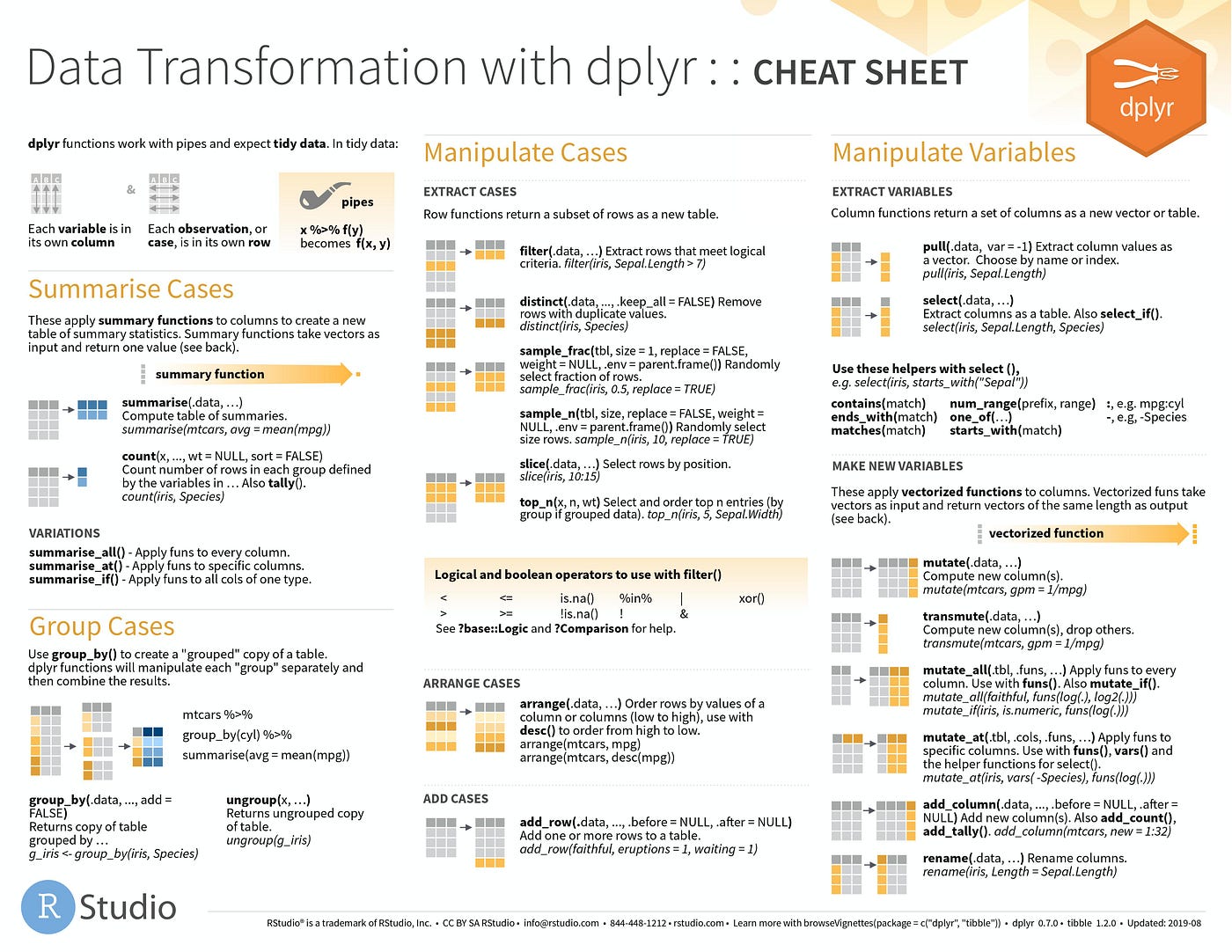

I often forget commands for various things that I need to do with data. RStudio provides a great set of Cheatsheets for various packages and processes of data science and statistics. They are in the form of pdf posters like you'd see at a conference. The problem I have is that I am often working from The Lodge in West Virginia, where internet connection can be a challenge with Gauley River's canyon roaring in the background. This means I am often searching for and downloading the cheatsheets I think I may need while I am there. Since the cheatsheets are updated from time to time, I run the risk of having a dated one stored locally. This all led me to think about writing a short script that would access all RStudio Cheatsheets and download them locally. On to the code…

Load the Useful Libraries

The tidyverse is the workhorse of these types of operations, at least for those using R. Specifically, rvest is the primary tool for reading and parsing HTML. Finally, stringr provides consistent ways of dealing with text strings, which is useful when you are scraping lists of URLs.
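If you are not sure these packages are installed on the machine you are working from, a quick check along the following lines may help. This is only a sketch and not part of the original script; the names needed, installed, and missing_pkgs are made up for illustration.

```r
# Packages this post relies on (taken from its library() calls).
needed <- c("tidyverse", "rvest", "stringr")

# requireNamespace() reports TRUE/FALSE without attaching the package.
installed <- vapply(needed, requireNamespace, logical(1), quietly = TRUE)
missing_pkgs <- needed[!installed]

if (length(missing_pkgs) > 0) {
  message("Not installed: ", paste(missing_pkgs, collapse = ", "),
          ". Try install.packages() on these first.")
} else {
  message("All packages are available.")
}
```

The check only reports what is missing; installing is left to you, since that step needs an internet connection.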

library(tidyverse)
library(rvest)
library(stringr)

Scraping Links & Downloading Files

The line of code below gets the scrape started by identifying and reading the HTML of the Github repository that contains the pdfs of the RStudio Cheatsheets.

page <- read_html("https://www.github.com/rstudio/cheatsheets")

If you go to this repository, you'll see that clicking on one of the pdfs in the table doesn't produce the associated cheatsheet alone. It takes us to a second-level page of the repository and opens the file in a code editor. Obtaining the file for reference in your work means clicking the download button on the menu bar at the top of the code editor. And, par for the course, no scraping exercise is ever really simple. To get the file, we need to find a way around "clicking" the download button. To be clear, some scripts would allow us to "click" the button, but those I found deal in jsonlite and are more complicated (though more efficient) than what I do here.

raw_list <- page %>% # takes the page above for which we've read the html
  html_nodes("a") %>% # find all links in the page
  html_attr("href") %>% # get the url for these links
  str_subset("\\.pdf") %>% # keep only the links that contain .pdf
  str_c("https://www.github.com", .) %>% # prepend the website to the url
  map(read_html) %>% # take previously generated list of urls and read them
  map(html_node, "#raw-url") %>% # parse out the 'raw' url - the link for the download button
  map(html_attr, "href") %>% # return the set of raw urls for the download buttons
  str_c("https://www.github.com", .) %>% # prepend the website again to get a full url
  walk2(., basename(.), download.file, mode = "wb") # use purrr to download the pdf associated with each url to the current working directory

I leverage the initial scrape of links on the homepage of the repository to access, record, and get a list of the links associated with the download button for each cheatsheet. This is a workaround for not writing a script that "clicks" the button for each. The script is not the most efficient, but you'll end up with all of the great RStudio Cheatsheets in your working directory locally.

The prepending is important, as each call to read_html needs a full URL. The string concatenation function str_c from stringr makes this easy. In turn, the parsing in html_nodes and html_attr depends on having read the HTML. For the parsing, we make use of the map function in the Tidyverse package purrr.
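To see the link extraction and prepending steps without an internet connection, here is a minimal sketch that runs the same functions on a tiny hand-written page. The HTML and file names are invented stand-ins, not the real repository contents.

```r
library(rvest)
library(stringr)

# A tiny stand-in for the repository page; the file names are made up.
toy_page <- read_html('
  <html><body>
    <a href="/rstudio/cheatsheets/blob/main/example-one.pdf">example-one.pdf</a>
    <a href="/rstudio/cheatsheets/blob/main/README.md">README.md</a>
    <a href="/rstudio/cheatsheets/blob/main/example-two.pdf">example-two.pdf</a>
  </body></html>')

relative_links <- toy_page %>%
  html_nodes("a") %>%      # every <a> element on the page
  html_attr("href") %>%    # the href attribute of each link
  str_subset("\\.pdf")     # keep only the links that contain .pdf

# The hrefs are relative, so the site has to be prepended to get full URLs.
full_links <- str_c("https://www.github.com", relative_links)
print(full_links)
```

The README link is dropped by str_subset, and the two remaining links come back as full URLs that read_html could fetch.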

Important Tidbits

- In the initial scrape, str_subset("\\.pdf") tells R to return all the links with pdfs. Otherwise, you get the links for the entire repository, including development files.
- map(html_node, "#raw-url") tells R to look for the URL associated with the download button for each cheatsheet. You can identify this selector with SelectorGadget, a point-and-click tool available as a Chrome extension; search for it to find examples and instructions for identifying selectors.
- purrr::walk2 applies the download.file function to each of the generated raw links. The "." tells it to use the data from the previous command (the raw URLs). basename(.) tells it to give the downloaded file the basename of the URL (e.g., "purrr.pdf").
- Depending on your operating system (Windows in particular), you may need to add mode = "wb" so that the pdfs are written as binary files. Otherwise the files may appear blank, corrupt, or not render properly. See the documentation for the download.file() command for more information.
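The tidbits above can be tried out offline with a small sketch like this one. The URLs are hypothetical, and fake_download is a made-up stand-in for download.file that only reports what it would do.

```r
library(purrr)
library(stringr)

# Hypothetical raw URLs, used only to show how the pieces fit together.
urls <- c("https://example.com/files/purrr.pdf",
          "https://example.com/files/stringr.pdf",
          "https://example.com/files/notes.pdf.md")

# "\\.pdf" matches ".pdf" anywhere in the string; "\\.pdf$" only at the end.
str_subset(urls, "\\.pdf")   # keeps all three
str_subset(urls, "\\.pdf$")  # drops notes.pdf.md

pdf_urls <- str_subset(urls, "\\.pdf$")

# basename() strips the path, leaving the file name used as the destination.
basename(pdf_urls)

# A stand-in for download.file() that only reports what it would do.
fake_download <- function(url, destfile, mode) {
  message("would save ", url, " as ", destfile, " (mode = ", mode, ")")
}

# walk2() calls the function once per (url, destfile) pair; extra arguments
# such as mode are passed along to every call.
walk2(pdf_urls, basename(pdf_urls), fake_download, mode = "wb")
```

Anchoring the pattern with $ is a slightly stricter choice than the one in the script; which of the two fits depends on what else the page links to.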

Old School

Note that walk2 and many of these other great functions from the Tidyverse are not necessary per se. The for-loop below is an old-school implementation of what walk2 is doing above. Here, download.file is applied to each URL in raw_list (url is just a tag here for each line in the object raw_list).

for (url in raw_list) {
  download.file(url, destfile = basename(url), mode = "wb")
}

Wrapping Up

So, this is not the most efficient code for scraping where there is a need for "button-clicking", but it gets the job done with minimal packages and minimal knowledge of JSON or other languages. Now that we're done, I can also say that the Cheatsheets are available at RStudio's resource page. There, they would be an easier scrape, and of course you can click and download. I often point students to them, as they are a great resource in teaching data analysis and statistics.
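On the efficiency point, one possible shortcut is to skip the second round of page reads and rewrite each file-page link into GitHub's raw-file address instead. The sketch below assumes the links collected from the repository page look like /owner/repo/blob/branch/file.pdf; the branch and file names are hypothetical, and I have not checked this against the repository's current layout.

```r
library(stringr)

# Assumed shape of the links collected from the repository page
# (the branch and file names here are hypothetical).
blob_links <- c("/rstudio/cheatsheets/blob/main/example-one.pdf",
                "/rstudio/cheatsheets/blob/main/example-two.pdf")

# Drop the "/blob" segment and switch to GitHub's raw-content host.
raw_urls <- str_c("https://raw.githubusercontent.com",
                  str_replace(blob_links, "/blob/", "/"))
print(raw_urls)

# The downloads themselves need a connection, so they are left commented out:
# for (url in raw_urls) {
#   download.file(url, destfile = basename(url), mode = "wb")
# }
```

If the assumption about the link layout holds, this avoids reading one page per cheatsheet just to find its download button.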

Source: https://towardsdatascience.com/scraping-downloading-and-storing-pdfs-in-r-367a0a6d9199

Posted by: elmerelmerhummingbirde0277863.blogspot.com
